Hyperparameters

Choices about the algorithm that we set rather than learn

Very problem dependent

Must try different values and see what works best

Setting Hyperparameters

Idea 1: Choose hyperparameters that work best on the training data

BAD: K=1 always works perfectly on training data (each training point is its own nearest neighbor)
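A minimal sketch of why Idea 1 fails, assuming the algorithm in question is a K-nearest-neighbor classifier (suggested by the reference to K=1). The toy data, the labels, and the helper names `knn_predict` and `accuracy` are illustrative assumptions, not part of the original notes. The labels are pure noise, so there is nothing to learn, yet K=1 still scores perfectly on the training set.

```python
import random

def knn_predict(train_x, train_y, query, k):
    """Majority label among the k training points closest to query (squared L2)."""
    dists = sorted(
        (sum((a - b) ** 2 for a, b in zip(x, query)), i)
        for i, x in enumerate(train_x)
    )
    votes = [train_y[i] for _, i in dists[:k]]
    return max(set(votes), key=votes.count)

def accuracy(train_x, train_y, eval_x, eval_y, k):
    """Fraction of eval points whose predicted label matches the true label."""
    correct = sum(
        knn_predict(train_x, train_y, x, k) == y for x, y in zip(eval_x, eval_y)
    )
    return correct / len(eval_x)

rng = random.Random(0)

# Hypothetical toy data: random 2-D points with random binary labels (pure noise).
train_x = [(rng.random(), rng.random()) for _ in range(50)]
train_y = [rng.randint(0, 1) for _ in range(50)]
new_x = [(rng.random(), rng.random()) for _ in range(50)]
new_y = [rng.randint(0, 1) for _ in range(50)]

# With K=1, every training point finds itself at distance 0.
train_acc_k1 = accuracy(train_x, train_y, train_x, train_y, k=1)
# Accuracy on unseen points is the number that actually matters.
new_acc_k1 = accuracy(train_x, train_y, new_x, new_y, k=1)

print("K=1 accuracy on training data:", train_acc_k1)
print("K=1 accuracy on unseen data:  ", new_acc_k1)
```

Training accuracy here says nothing about whether K=1 is a good choice, which is why the hyperparameter has to be judged on data the model has not memorized.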

Idea 2: Split data into train and test

Choose hyperparameters that work best on test data

BAD: No idea how algorithm will perform on new data (test accuracy is biased once the test set has been used to pick hyperparameters)

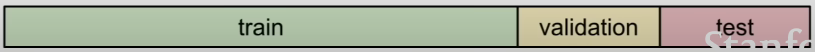

Idea 3: Split data into train, val, and test

Choose hyperparameters on val and evaluate on test

Better!

Do not touch the test set until the final evaluation
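The train / val / test procedure can be sketched as follows, again assuming a K-nearest-neighbor classifier. The two-blob dataset, the 200/50/50 split sizes, and the candidate values of K are arbitrary assumptions chosen for illustration.

```python
import random

def knn_predict(train_x, train_y, query, k):
    """Majority label among the k training points closest to query (squared L2)."""
    dists = sorted(
        (sum((a - b) ** 2 for a, b in zip(x, query)), i)
        for i, x in enumerate(train_x)
    )
    votes = [train_y[i] for _, i in dists[:k]]
    return max(set(votes), key=votes.count)

def accuracy(train, eval_set, k):
    """Accuracy on eval_set of a k-NN classifier that stores `train`."""
    train_x = [x for x, _ in train]
    train_y = [y for _, y in train]
    correct = sum(knn_predict(train_x, train_y, x, k) == y for x, y in eval_set)
    return correct / len(eval_set)

rng = random.Random(0)

# Hypothetical toy data: two overlapping Gaussian blobs, one per class.
points = []
for label, center in ((0, 0.0), (1, 2.0)):
    for _ in range(150):
        points.append(((rng.gauss(center, 1.0), rng.gauss(center, 1.0)), label))
rng.shuffle(points)

# Split once, up front: 200 train, 50 val, 50 test.
train, val, test = points[:200], points[200:250], points[250:]

# Choose the hyperparameter using the validation set only.
candidate_ks = [1, 3, 5, 7, 9]
val_acc = {k: accuracy(train, val, k) for k in candidate_ks}
best_k = max(candidate_ks, key=lambda k: val_acc[k])

# Touch the test set exactly once, after K has been fixed.
test_acc = accuracy(train, test, best_k)

print("validation accuracy per K:", val_acc)
print("chosen K:", best_k)
print("test accuracy:", test_acc)
```

Because the test set plays no part in choosing K, `test_acc` is an honest estimate of performance on new data.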

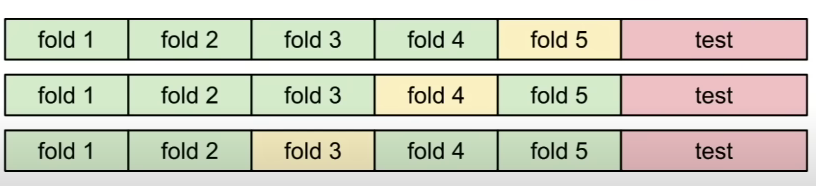

Idea 4: Cross-Validation

Split data into folds, use each fold in turn as the validation set (training on the rest), and average the results

- Useful for small datasets, but rarely used in deep learning, where training a model once per fold is expensive
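A minimal sketch of cross-validation for choosing K, assuming a K-nearest-neighbor classifier and 5 folds. The helper `cross_validate`, the toy data, and the fold count are illustrative assumptions. A separate test set would still be held out for the final evaluation; it is omitted here to keep the example short.

```python
import random

def knn_predict(train_x, train_y, query, k):
    """Majority label among the k training points closest to query (squared L2)."""
    dists = sorted(
        (sum((a - b) ** 2 for a, b in zip(x, query)), i)
        for i, x in enumerate(train_x)
    )
    votes = [train_y[i] for _, i in dists[:k]]
    return max(set(votes), key=votes.count)

def accuracy(train_x, train_y, eval_x, eval_y, k):
    """Fraction of eval points whose predicted label matches the true label."""
    correct = sum(
        knn_predict(train_x, train_y, x, k) == y for x, y in zip(eval_x, eval_y)
    )
    return correct / len(eval_x)

def cross_validate(xs, ys, k, num_folds=5):
    """Mean validation accuracy over num_folds contiguous folds.

    If len(xs) is not divisible by num_folds, the leftover samples at the end
    are never used for validation and always stay in the training portion.
    """
    fold_size = len(xs) // num_folds
    scores = []
    for f in range(num_folds):
        lo, hi = f * fold_size, (f + 1) * fold_size
        val_x, val_y = xs[lo:hi], ys[lo:hi]            # fold f is held out
        tr_x, tr_y = xs[:lo] + xs[hi:], ys[:lo] + ys[hi:]  # train on the rest
        scores.append(accuracy(tr_x, tr_y, val_x, val_y, k))
    return sum(scores) / num_folds

rng = random.Random(0)

# Hypothetical small dataset: two overlapping Gaussian blobs, shuffled.
points = []
for label, center in ((0, 0.0), (1, 2.0)):
    for _ in range(50):
        points.append(((rng.gauss(center, 1.0), rng.gauss(center, 1.0)), label))
rng.shuffle(points)
xs = [x for x, _ in points]
ys = [y for _, y in points]

candidate_ks = [1, 3, 5, 7, 9]
cv_acc = {k: cross_validate(xs, ys, k) for k in candidate_ks}
best_k = max(candidate_ks, key=lambda k: cv_acc[k])

print("mean cross-validation accuracy per K:", cv_acc)
print("chosen K:", best_k)
```

Every sample serves as validation data exactly once, so the estimate uses the whole small dataset, at the cost of evaluating the model once per fold for each candidate K.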