Floating Point Numbers

Floating Point Number Systems

Real Numbers

- Infinite in extent

  - There exists $x$ such that $|x|$ is arbitrarily large

- Infinite in density

  - Any interval $a \le x \le b$ (with $a < b$) contains infinitely many numbers

Floating point systems

- An approximate representation of real numbers using a finite number of bits

Normalized Form of a Real number

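After expressing the real number in the desired base $\beta$, we multiply by a power of $\beta$ to shift it into a normalized form:

$$0.d_1 d_2 d_3 d_4 \ldots \times \beta^p$$

where:

- $d_i$ are digits in base $\beta$, i.e. $0 \le d_i < \beta$

- normalized implies we shift to ensure $d_1 \ne 0$

- exponent $p$ is an integer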

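Density (or precision) is bounded by limiting the number of digits, $t$.

Extent (or range) is bounded by limiting the range of values for the exponent $p$.

A floating point number then has the form

$$\pm 0.d_1 d_2 \ldots d_t \times \beta^p$$

for $L \le p \le U$ and $d_1 \ne 0$.

The four integer parameters $\{\beta, t, L, U\}$ characterize a specific floating point system $F$.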
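To make the effect of the four parameters concrete, the following minimal sketch enumerates every positive normalized number of a small toy system. The function name `enumerate_fp_system` and the choice $\beta = 2$, $t = 3$, $L = -1$, $U = 2$ are illustrative assumptions, not part of the original notes.

```python
from fractions import Fraction

def enumerate_fp_system(beta, t, L, U):
    """Return all positive normalized values 0.d1 d2 ... dt * beta**p, sorted.

    Hypothetical helper for illustration; exact rational arithmetic is used
    so that the toy system is not distorted by the host machine's floats.
    """
    values = set()
    # The t-digit mantissa is m / beta**t, and d1 != 0 means m >= beta**(t-1).
    for m in range(beta ** (t - 1), beta ** t):
        for p in range(L, U + 1):
            values.add(Fraction(m, beta ** t) * Fraction(beta) ** p)
    return sorted(values)

toy = enumerate_fp_system(beta=2, t=3, L=-1, U=2)
print(len(toy), "positive values")
print([float(v) for v in toy])

# Gaps between neighbours grow with the exponent: density is not uniform.
gaps = [float(b - a) for a, b in zip(toy, toy[1:])]
print(gaps)
```

The number of digits $t$ fixes how many values share each exponent, while $L$ and $U$ fix the smallest and largest magnitudes that can be reached.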

Overflow / Underflow Errors

- If the exponent is too big or too small, our system cannot represent this number

- For underflow, the answer rounds to 0

- For overflow, the result is typically $\pm\infty$ or NaN
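The behaviour above can be observed directly with Python floats, which are IEEE doubles on common platforms (an assumption about the host machine). This is a small sketch, not a complete treatment: IEEE doubles also pass through very small subnormal values before reaching 0.

```python
import math
import sys

largest = sys.float_info.max          # largest finite double
smallest_normal = sys.float_info.min  # smallest positive normalized double

overflowed = largest * 10.0              # exponent too large: becomes inf
underflowed = smallest_normal * 1e-20    # exponent too small: rounds to 0.0
undefined = overflowed - overflowed      # inf - inf has no meaningful value: nan

print(overflowed, underflowed, undefined)
```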

Floating Point Standards

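IEEE Single Precision (32 Bits): $\beta = 2$, $t = 24$, with exponents from $-126$ to $127$ when written in the IEEE convention $1.f \times 2^e$

IEEE Double Precision (64 Bits): $\beta = 2$, $t = 53$, with exponents from $-1022$ to $1023$ in the same convention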
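As a quick check, Python exposes the parameters of its own float type through `sys.float_info`, and the `struct` module can round-trip a value through 4 bytes to mimic single precision. The sketch assumes the host uses IEEE doubles for Python floats, which is the usual case.

```python
import struct
import sys

# Parameters of the double precision system used for Python floats
print("base:", sys.float_info.radix)
print("digits in the significand:", sys.float_info.mant_dig)

# Store 0.1 in 4 bytes (single precision) and read it back as a double
as_single = struct.unpack("f", struct.pack("f", 0.1))[0]
print(as_single)          # differs from the double nearest to 0.1
print(as_single == 0.1)   # False: fewer digits were kept
```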

Floating Point Density

Rounding vs. Truncation

Round-to-nearest

Rounds to the closest available number in the floating point system $F$

- Usually the default

- We’ll break ties by simply rounding up

Truncation

Rounds to the next number in the floating point system $F$ towards 0

- Simply discard any digit after $d_t$
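The two rules can be compared in base 10 with Python's `decimal` module. This is a minimal sketch: the helper `to_t_digits` is a hypothetical name, `ROUND_HALF_UP` stands in for round-to-nearest with ties rounded up, and `ROUND_DOWN` stands in for truncation.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP

def to_t_digits(x, t, mode):
    """Keep t significant decimal digits of x (given as a string)."""
    d = Decimal(x)
    if d == 0:
        return d
    # adjusted() is the exponent of the leading digit, so this quantum
    # marks the position of the t-th significant digit.
    quantum = Decimal(1).scaleb(d.adjusted() - t + 1)
    return d.quantize(quantum, rounding=mode)

rounded = to_t_digits("2.71828", 3, ROUND_HALF_UP)
truncated = to_t_digits("2.71828", 3, ROUND_DOWN)
tie_rounded = to_t_digits("0.125", 2, ROUND_HALF_UP)
tie_truncated = to_t_digits("0.125", 2, ROUND_DOWN)
print(rounded, truncated, tie_rounded, tie_truncated)
```

Truncation never moves away from 0, so its worst-case error is a full unit in the last kept digit, while rounding to nearest is off by at most half a unit.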

Measuring Error

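Let $x_{\text{exact}}$ be the true solution and $x_{\text{approx}}$ the computed approximation. Error is the difference between the two.

We distinguish between absolute error and relative error:

$$E_{\text{abs}} = |x_{\text{exact}} - x_{\text{approx}}|, \qquad E_{\text{rel}} = \frac{|x_{\text{exact}} - x_{\text{approx}}|}{|x_{\text{exact}}|}$$

Relative error:

- Is independent of the magnitudes of the numbers involved

- Relates to the number of significant digits in the result

A result is correct to roughly $s$ digits if $E_{\text{rel}} \approx 10^{-s}$, i.e. $0.5 \times 10^{-s} \le E_{\text{rel}} < 5 \times 10^{-s}$.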
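A short sketch of both error measures, using $22/7$ as an approximation to $\pi$. The function names are illustrative, and the definitions assumed are the usual ones: absolute error is the magnitude of the difference, and relative error divides that by the magnitude of the exact value.

```python
import math

def absolute_error(exact, approx):
    return abs(exact - approx)

def relative_error(exact, approx):
    return abs(exact - approx) / abs(exact)

exact = math.pi
approx = 22 / 7

e_abs = absolute_error(exact, approx)
e_rel = relative_error(exact, approx)
digits = -math.log10(e_rel)   # rough count of correct significant digits
print(e_abs, e_rel, digits)

# Scaling both values changes the absolute error but not the relative error
scaled_abs = absolute_error(1e6 * exact, 1e6 * approx)
scaled_rel = relative_error(1e6 * exact, 1e6 * approx)
print(scaled_abs, scaled_rel)
```

Here the relative error is a few times $10^{-4}$, matching the roughly three correct digits in 3.14.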

Floating Point vs. Reals

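For FP system $F$, the relative error between $x \in \mathbb{R}$ and its floating point approximation $fl(x)$ has a bound $E$ such that $(1 - E)|x| \le |fl(x)| \le (1 + E)|x|$.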

Machine Epsilon

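The maximum relative error, $E$, for a FP system is called machine epsilon or unit round-off error.

It is defined as the smallest value such that $fl(1 + E) > 1$.

We have the rule $fl(x) = x(1 + \delta)$ for some $|\delta| \le E$.

For an FP system $(\beta, t, L, U)$ with

- rounding to nearest: $E = \frac{1}{2}\beta^{1-t}$

- truncation: $E = \beta^{1-t}$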
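The following sketch probes machine epsilon experimentally for Python floats, assuming they are IEEE doubles ($\beta = 2$, $t = 53$). The halving loop only tests powers of two, and IEEE hardware breaks ties to even rather than up, so it stops at $\beta^{1-t} = 2^{-52}$; the round-to-nearest bound $\frac{1}{2}\beta^{1-t} = 2^{-53}$ is checked separately on the stored value of 0.1.

```python
import sys
from fractions import Fraction

def smallest_power_of_two_eps():
    """Smallest power of two eps with fl(1 + eps) > 1 (hypothetical helper)."""
    eps = 1.0
    while 1.0 + eps / 2.0 > 1.0:
        eps /= 2.0
    return eps

eps = smallest_power_of_two_eps()
print(eps, sys.float_info.epsilon)

# Relative error made when 1/10 is stored as a double, computed exactly
stored = Fraction(0.1)        # exact value of the double nearest to 0.1
true_value = Fraction(1, 10)
rel_err = abs(stored - true_value) / true_value
print(float(rel_err), 2.0 ** -53)
```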

Arithmetic with Floating Point

What guarantees are there on a FP arithmetic operation?

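IEEE standard requires that for $w, z \in F$, $w \oplus z = fl(w + z) = (w + z)(1 + \delta)$ with $|\delta| \le E$, where $\oplus$ denotes floating point addition.

Result is order-dependent; associativity is broken! In general, $(a \oplus b) \oplus c \ne a \oplus (b \oplus c)$.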
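A familiar example of the broken associativity, using Python floats (assumed to be IEEE doubles). Each individual addition is rounded correctly, yet the two groupings round at different points and disagree in the last digit.

```python
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)

print(left, right)
print(left == right)        # False: the grouping changes the result
print(abs(left - right))    # the two answers differ by a tiny amount
```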

Round-off Error Analysis

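What can we say about $(a \oplus b) \oplus c$?

Let us use the rule $w \oplus z = (w + z)(1 + \delta)$ twice, with $|\delta_1|, |\delta_2| \le E$:

$$(a \oplus b) \oplus c = \big((a + b)(1 + \delta_1) + c\big)(1 + \delta_2)$$

Expanding and applying the triangle inequality gives

$$|(a \oplus b) \oplus c - (a + b + c)| \le (|a| + |b| + |c|)(2E + E^2)$$

so that

$$E_{\text{rel}} \le \frac{|a| + |b| + |c|}{|a + b + c|}\,(2E + E^2)$$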

This analysis describes only the worst case error magnification. Actual error could be much less.
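To see how loose or tight the worst case can be, the sketch below compares the actual relative error of $(a \oplus b) \oplus c$ against the standard bound $\frac{|a| + |b| + |c|}{|a + b + c|}(2E + E^2)$. It assumes IEEE doubles with round to nearest, so $E = 2^{-53}$, and it treats the stored inputs as exact; `sum3_error_report` is a hypothetical helper name.

```python
from fractions import Fraction

E = Fraction(1, 2 ** 53)  # unit round-off assumed for doubles

def sum3_error_report(a, b, c):
    """Return (actual relative error, worst-case bound) for (a + b) + c."""
    computed = Fraction((a + b) + c)                 # two rounded additions
    exact = Fraction(a) + Fraction(b) + Fraction(c)  # exact rational sum
    actual = abs(computed - exact) / abs(exact)
    magnification = (abs(Fraction(a)) + abs(Fraction(b)) + abs(Fraction(c))) / abs(exact)
    bound = magnification * (2 * E + E * E)
    return actual, bound

for triple in [(0.1, 0.2, 0.3), (1.0, 1e-8, -1.0)]:
    actual, bound = sum3_error_report(*triple)
    print(triple, float(actual), float(bound))
```

In the second triple the sum is tiny compared with its terms, so both the bound and the actual error are many orders of magnitude larger than in the first.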

Behaviour of Error Bounds

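We showed that

$$E_{\text{rel}} \le \frac{|a| + |b| + |c|}{|a + b + c|}\,(2E + E^2)$$

The term $\frac{|a| + |b| + |c|}{|a + b + c|}$ determines how much the round-off error is magnified; the factor $2E + E^2$ is fixed by the FP system.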

Cancellation Errors

When is $\frac{|a| + |b| + |c|}{|a + b + c|}$ large?

- when $|a + b + c| \ll |a| + |b| + |c|$

- this occurs when quantities have differing signs and similar magnitudes

Catastrophic cancellation occurs when subtracting numbers of about the same magnitude when the input numbers contain error.

- All significant digits may cancel out.
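A small illustration, assuming IEEE doubles: adding a tiny `x` to 1 introduces a relative rounding error of about $10^{-16}$, and subtracting 1 again exposes that error as a large fraction of the result. The variable names are illustrative only.

```python
x = 1e-15

stored = 1.0 + x            # rounding error enters here (relative size ~1e-16)
recovered = stored - 1.0    # the subtraction itself is exact, but the leading
                            # digits cancel and only the rounding noise remains

rel_err = abs(recovered - x) / x
print(recovered, rel_err)   # the relative error is on the order of 10%
```

The subtraction did not create the error; it removed the digits that were correct and left the ones that were not.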

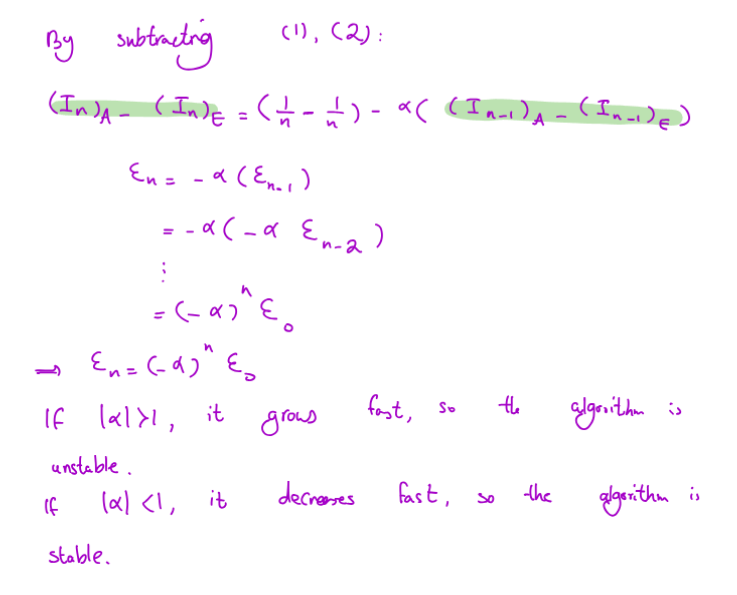

Stability of Algorithms

If any initial error in the data is magnified by an algorithm, the algorithm is considered numerically unstable.

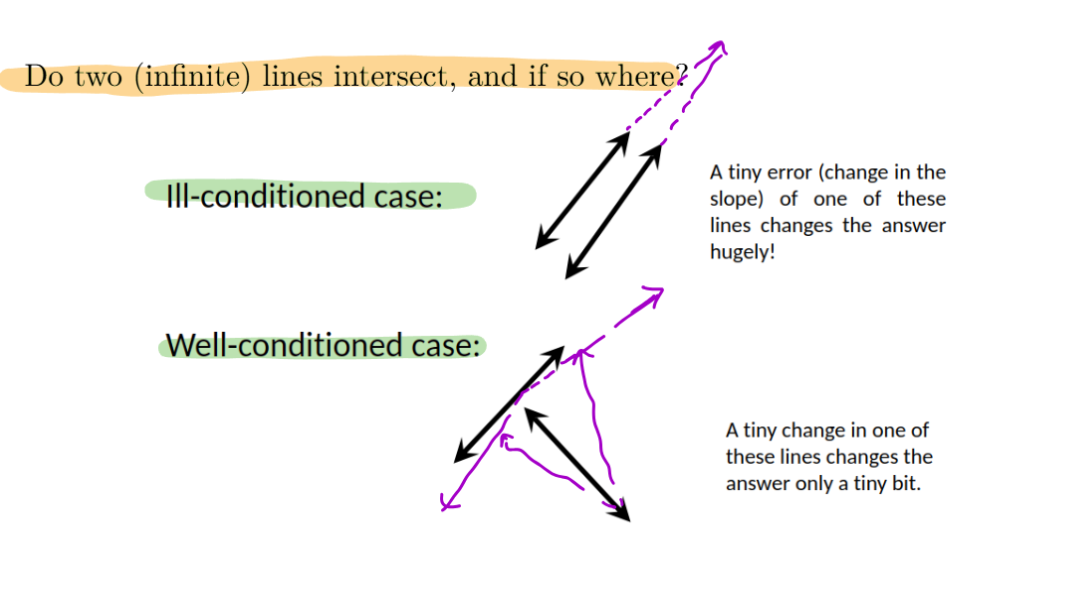

Conditioning of Problems

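For problem $P$ with input $I$ and output $O$, if a small change to the input, $\Delta I$, gives only a small change in the output, $\Delta O$, then $P$ is well-conditioned. Otherwise, $P$ is ill-conditioned.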

- This is a property of the problem itself, independent of any specific implementation.
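One way to probe conditioning numerically is to perturb the input by a small relative amount and measure the relative change in the output. The sketch below does this for two example problems chosen for illustration (they are not from the original notes): $\sqrt{x}$ at $x = 2$, and $x - 1$ at $x$ close to 1.

```python
import math

def relative_change(f, x, rel_perturbation):
    """Relative change in f(x) caused by a relative change in x."""
    x_perturbed = x * (1.0 + rel_perturbation)
    return abs(f(x_perturbed) - f(x)) / abs(f(x))

# Well-conditioned: the output changes by about half the input change
well = relative_change(math.sqrt, 2.0, 1e-6)

# Ill-conditioned: a 1e-6 change in the input changes the output by about 100%
ill = relative_change(lambda x: x - 1.0, 1.000001, 1e-6)

print(well, ill)
```

No choice of algorithm can repair the second case, since the sensitivity belongs to the problem itself.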

Conditioning vs. Stability

Conditioning of a problem:

- How sensitive is the problem itself to errors or changes in input?

Stability of a numerical algorithm:

- How sensitive is the algorithm to errors or changes in input?

Note that:

- An algorithm can be unstable even for a well-conditioned problem!

- An ill-conditioned problem limits how well we can expect any algorithm to perform

Stability Analysis of an Algorithm

We can analyse whether errors accumulate or shrink to determine the stability of algorithms
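As an illustration of such an analysis, consider the recurrence $I_n = \frac{1}{n} - \alpha I_{n-1}$ with $I_0 = \ln\frac{1 + \alpha}{\alpha}$. This example is an assumption brought in for illustration and is not taken from the notes above. An error $e_0$ in $I_0$ propagates as $e_n = (-\alpha)^n e_0$, so it shrinks when $|\alpha| < 1$ and grows when $|\alpha| > 1$. The sketch checks this by running the recurrence twice, once with a deliberately perturbed starting value.

```python
import math

def run_recurrence(alpha, i0, steps):
    """Apply I_n = 1/n - alpha * I_{n-1} for n = 1..steps, starting at i0."""
    value = i0
    for n in range(1, steps + 1):
        value = 1.0 / n - alpha * value
    return value

def error_after(alpha, steps, initial_error=1e-10):
    """Size of the final difference caused by perturbing the starting value."""
    i0 = math.log((1.0 + alpha) / alpha)
    perturbed = run_recurrence(alpha, i0 + initial_error, steps)
    reference = run_recurrence(alpha, i0, steps)
    return abs(perturbed - reference)

shrunk = error_after(alpha=0.5, steps=30)  # error is damped at every step
grown = error_after(alpha=2.0, steps=30)   # error doubles at every step
print(shrunk, grown)
```

With $\alpha = 2$ the initial error of $10^{-10}$ is amplified by roughly $2^{30}$, so the same formula is numerically unstable there and stable for $\alpha = 0.5$.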